Billions of people around the world use social media platforms such as Facebook, X/Twitter, Instagram, YouTube and TikTok, and they speak an enormous number of different languages. Yet the platforms are not treating these users equitably, and they seem to prioritise some countries and languages over others.

Evidence of this emerged in the internal Facebook files leaked by Frances Haugen in 2021. They revealed that the platform spent a whopping 87% of its budget for combating misinformation on English-language content, even though only 9% of its users were English speakers at the time.

New reports just published by the platforms give us an opportunity to dig further into this concern in Europe. The EU’s Digital Services Act requires the biggest social media platforms to publish twice-yearly reports that include data on the human resources dedicated to content moderation, broken down by each of the EU’s official languages. The first tranche of reports was published in November 2023, and so we were curious to look at the reports from Facebook, Instagram, X, TikTok, YouTube, Snapchat, Pinterest and LinkedIn.

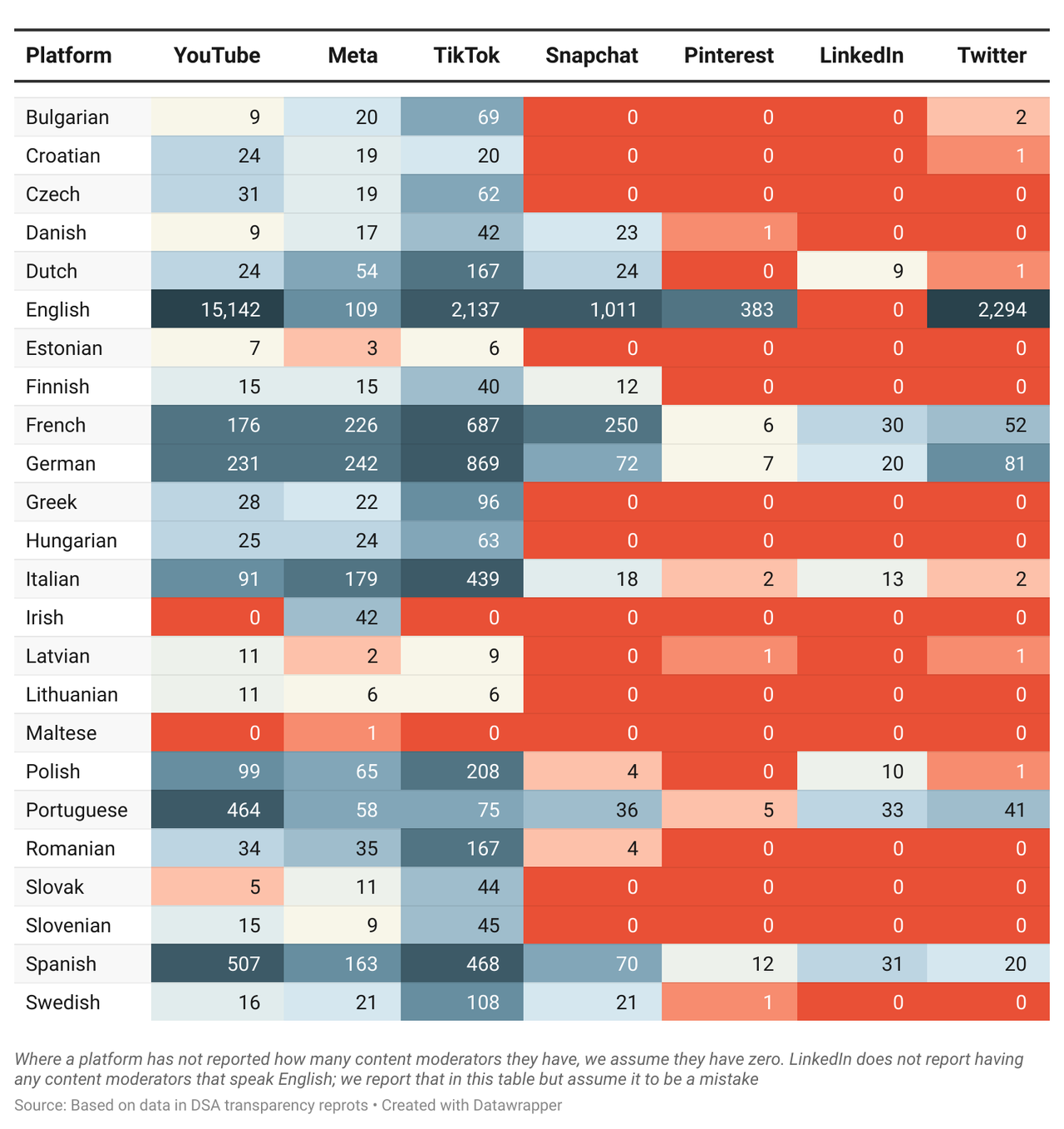

What we found raises concerns about how some of the platforms treat users who don’t post in English. The table below shows the number of content moderators reported by each platform in each EU language. Care is needed in interpreting these numbers because they are not all comparable with each other (see below), but there are some key insights we can draw from the reports, as we discuss next. These findings have important ramifications for a whole range of threats stemming from social media, including how hate speech, incitement to violence and disinformation are detected and moderated.

The EU’s transparency requirements for Big Tech are too lax

Our first finding from looking through all the reports is that differences in how the companies have reported their content moderation efforts make assessing those efforts tricky, and comparing them across platforms even more difficult.

In particular:

- Platforms vary in whether they have provided global or EU-specific content moderation figures. Meta makes it clear that the numbers it reports are for reviewers “who work on EU-specific content across EU official languages” and that there are additional reviewers who review content in languages that are widely spoken outside the EU. Google states that content on YouTube “can be posted by users globally or reviewed by content moderators located globally.” Other platforms don’t make it clear whether the numbers they report are global or EU-specific.

- Platforms vary in whether they state the number of moderators who are proficient in each language or who have moderated content in each language. For example, X provides figures on the number of people who are proficient in the ‘commonly spoken’ languages in the EU whereas YouTube provides figures on “the number of content moderators who reviewed at least 10 videos” over a two-week period in each of the languages. Google also states that “translation tools may be used to assist in the review process.” In other words, YouTube’s numbers say nothing about the number of moderators they have who are actually proficient in different languages. This prevents YouTube’s figures from being meaningfully compared to other platforms’.

- The total number of content moderators is often unclear, because some platforms count a moderator who speaks more than one language once in each relevant language row.

Requiring platforms to be more transparent is never going to lead to more accountability if the information is incomplete and published in a way that makes comparisons difficult or impossible.

The European Commission’s upcoming mandatory template for reporting must address these shortcomings.

TikTok appears to have a lot more human content moderators than Facebook and Instagram in the EU

Despite the issues with the data described above, there are some broad patterns that can be drawn from the first set of transparency reports.

TikTok appears to have approximately four times as many human content moderators working on EU content as Facebook and Instagram do. TikTok estimates that it has 5,730 content moderators “who are dedicated to moderating content in the European Union” [1]. Meta estimates that Facebook and Instagram have approximately 1,362 content moderators [2] who “work on EU-specific content across EU official languages”.

Although the platforms may have arrived at these figures in somewhat different ways, the gap between them is so large, and Facebook and Instagram each have roughly twice as many users in the EU as TikTok, that it points to a real underlying difference in the resources each platform puts into human content moderation in the EU.

Content moderation often appears to be dominated by English

On both X and Pinterest, only 8% of the content moderators are proficient in an official EU language other than English. On YouTube, only 11% of EU-language content moderators (who reviewed at least 10 videos) reviewed content that wasn’t in English. Part of the explanation for these extreme differences is presumably that English-language moderators also handle a lot of content from outside the EU, so more of them are needed than for languages spoken predominantly within the EU.

French, Spanish and Portuguese are underrepresented, especially on X and YouTube

One of the challenges of interpreting figures on content moderators who speak different languages is that some of the official EU languages are mostly spoken within the EU, whereas others, especially English, French, Spanish and Portuguese, are spoken globally.

Given this, we might expect these other global languages to attract relatively large numbers of moderators too. Yet while the number of moderators who speak English is often very high (see above), the numbers who moderate French, Spanish and Portuguese are particularly low, especially on X and YouTube, as shown in the graphs below.

Some platforms have no content moderators for some European languages

All the social media platforms that we looked at, except Facebook and Instagram, lack human content moderators in some of the official EU languages.

For example, neither X nor Snapchat has a single content moderator who can speak Estonian, Greek, Hungarian, Irish, Lithuanian, Maltese, Slovak or Slovenian. In addition, X does not have any content moderators who can speak Czech, Danish, Finnish, Romanian or Swedish.

LinkedIn reports that it has moderators who are proficient in just seven official EU languages. It discloses that it has zero moderators for Czech, Danish, Romanian and Swedish, and fails to report any data for the other 13 EU languages, with the excuse that these languages are not currently supported by its website. One of the languages it failed to report on was English, even though English is an official language of both Ireland and Malta.

Inconsistencies, inequities and a toxic business model

Our analysis of social media corporations’ EU transparency reports shows that many of them are failing to properly invest in safeguards for millions of users across Europe, especially in eastern Europe.

These leading social media corporations are among the wealthiest companies in the world, so their failure to properly invest in content moderation in Europe is deeply concerning. With important elections taking place in Europe over the next year, including for the European Parliament and in Austria, Belgium, Croatia, Finland, Lithuania, Portugal, Romania and Slovakia, this resource gap could seriously undermine the platforms’ ability to put adequate checks and balances in place against hate and disinformation.

Companies such as X/Twitter and Meta have reportedly made cuts to their content moderation teams. In addition, the situation is likely to be even worse in other parts of the non-English-speaking world, where there is less regulatory oversight.

As our own investigations have shown, the automated and human content moderation checks of Facebook, YouTube and TikTok have failed to detect content that blatantly violates the platforms’ own policies.

Relying on translations to moderate content is not good enough; moderators need a cultural and political understanding of the country they’re working on. It is difficult to see how a platform could expect to live up to the Digital Services Act requirement to mitigate risks to elections without moderators who understand these countries and speak their languages. The EU has a real opportunity to hold platforms to account on how they moderate content. But the first step that’s needed is to make sure that the transparency that’s required of platforms is meaningful.

However, we know that content moderation is merely a sticking plaster as long as these corporations continue to rely on an engagement-based and profit-hungry business model. These models prioritise maximising the time people spend on the platforms and the content they engage with, in order to collect more data on users and serve them more adverts, even though there is wide-ranging evidence that this promotes the spread of hate and disinformation. Greater and more equitable investment in content moderators, together with meaningful transparency, is crucial in the short term, but in the long term we need a paradigm shift in how Big Tech does business so that together we can build an online world that connects rather than divides us.

[1] TikTok reports that it has 6,125 content moderators dedicated to content in the EU, and that this figure includes 395 non-language-specific moderators. We have subtracted these non-language-specific moderators from the figure stated above to make it more comparable with Meta’s, since Meta lists its number of ‘language agnostic’ reviewers as a global figure.

[2] This number is only approximate because it is the sum of the content moderators Meta lists for each official EU language, and it may therefore double count people who moderate in more than one language.