For many people, social media is not safe. Large social media companies employ a business model that disrupts our democracies, leading to real-world harm.

Over the last year, companies such as Meta and X / Twitter have made significant cuts, including to teams responsible for election safety and human rights. Like others, we fear that this underinvestment in user safety will fuel the spread of election disinformation and hate speech, which has been reported to incite violence and even genocide.

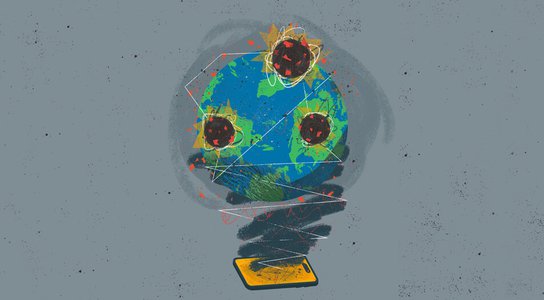

In 2024, we’re predicted to see 65 elections globally, involving billions of voters in countries with diverse democratic landscapes, from the EU, India, Mexico, and Indonesia, to Tunisia, Ethiopia, and Egypt. In light of this critical year for global democracies, it has never been more urgent for social media platforms to protect their users from the inevitable onslaught of online hate and disinformation.

January 2021: Pro-Trump supporters riot at the Capitol Building, Washington DC. Credit: Pacific Press Media Corp/Alamy Live News.

Our investigations

Following widespread pressure ahead of the 2020 US elections, social media companies claimed they had improved their safety policies, particularly in relation to advertising standards. We wanted to put this to the test.

In 2021, we started a new series of investigations to understand if large social media companies – Meta / Facebook, Google / YouTube, Twitter / X, TikTok – were enforcing their own policies in critical periods, such as during elections, unrest, and conflict.

We focused on their advertising policies, because advertising is the profit-making centre of their business and the area where they profess to apply rigorous content moderation. We reasoned that the results would also indicate the companies’ ability to identify and moderate hate speech across the rest of their platforms.

Our investigations follow the same methodology: we upload adverts containing examples, often real-life, of extreme hate speech or disinformation, sometimes targeting vulnerable communities, to test whether the platforms approve them for publication. We follow a rigorous process to ensure the adverts are never published. We also make the tests as easy as possible for the platforms to detect: every example flagrantly breaches their own policies and is written in clear language.

The results

So far, we have carried out over ten investigations in countries such as Brazil, Ethiopia, Ireland, Kenya, Myanmar, Norway, South Africa, the UK and the USA, all with similar results showing that social media platforms fail to enforce their own policies on hate speech and disinformation.

We believe our findings indicate that these companies would rather protect their profit-making business model than properly resource content moderation and protect the human rights of users. This is all the more troubling given the inequalities we see in policies and enforcement across countries, with companies appearing to put more effort into content moderation for users in the US than for users in the other countries we’ve examined. This is a global equity issue: social media users should not face a greater risk of political manipulation or hate speech depending on where in the world they live.

All these investigations are now available to view on an interactive map where you can jump between countries and compare results. This new tool allows you to explore our range of tests, gain a deeper insight into the scale of the issue, and track our progress as we update the map with our latest investigations.

2024 – a critical juncture for global democracy and social media platforms

On the International Day of Democracy 2023, we joined forces with a new movement, the Global Coalition for Tech Justice. Together with 149 partners worldwide, we will be stepping up our campaigning to demand that large social media companies invest in safeguarding the 2024 elections, especially for the overlooked global majority.

Whilst the outlook seems bleak, change is possible. In the words of Facebook whistle-blower Frances Haugen, these billion-dollar companies ‘choose profit over safety’. But we still believe it is within their power to make platforms safer for us all. In the short term, this means investing in content moderation during critical periods so that users are not exposed to online hate and disinformation on their platforms. In the long term, it means changing their business model to embed safety by design and to stop profiting from boosting hateful content and amplifying conspiracy theories and lies.