Reacting to the news that McCue Jury & Partners filed new lawsuits against Facebook in relation to Myanmar, Naomi Hirst, Head of the Digital Threats Campaign at Global Witness, said:

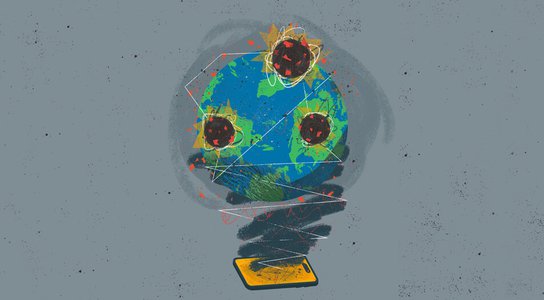

“Global Witness’ research found that Facebook’s own recommendation algorithm directed users towards content that incited violence, pushed misinformation and glorified the military’s abuses, despite the company having declared the situation in Myanmar to be an emergency where they were doing everything they could to keep people safe.

“Our findings suggest that making Facebook a safe space involves far more than finding and taking down content that breaches its terms of service. Rather, it requires a fundamental change to the algorithms that recommend dangerous and hateful messages, and shows the need for governments to legislate to hold Big Tech accountable for amplifying inflammatory content.”