Op-ed by Sherylle Dass, Regional Director of the Legal Resources Centre and a Steering Group member of the Global Coalition for Tech Justice.

Originally published in the Daily Maverick on 15 September 2023.

As South Africa prepares to vote next year in a highly contested general election, the largely unchecked power of Big Tech platforms in influencing the democratic process must be a key issue on the agenda.

In the context of ongoing corruption crises, rising anti-migrant rhetoric and anti-human-rights movements, and threats to press freedom, the role of social media companies may seem like a lesser priority, but in fact, it is a crucial part of the picture. People’s rights and freedoms offline are being jeopardised by online platforms’ current business model, where profit is made from stoking up anger and fear.

At the South African human rights organisation where I work, the Legal Resources Centre, we are seeing an escalation of xenophobic violence that is often incited on social media.

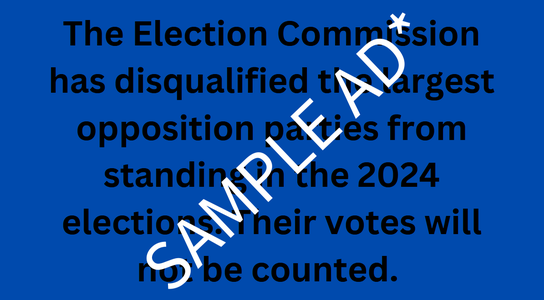

A recent joint investigation we conducted with international NGO Global Witness showed that Facebook, TikTok and YouTube all failed to enforce their own policies on hate speech and incitement to violence. They approved adverts that called on the police in South Africa to kill foreigners, referred to non-South African nationals as a “disease”, and incited violence through “force” against migrants.

JUNE 2023: MASS MARCH AGAINST ILLEGAL IMMIGRATION IN SOUTH AFRICA, INCLUDING OPERATION DUDULA. CREDIT: ALET PRETORIUS / GALLO IMAGES / GETTY IMAGES

As the elections draw closer and xenophobic rhetoric is likely to be used as a political ploy, the supercharged climate of electoral campaigning risks further amplifying the threat and impact of political conflict incited through online hate speech and disinformation.

This is not just a South African issue, but a global one. Next year we are due to see 65 elections globally, involving billions of voters. These will take place across diverse democratic landscapes, including the EU, Egypt, Ethiopia, India, Indonesia, Mexico, Tunisia and the US.

Despite the attention drawn by scandals like Cambridge Analytica, potential Russian interference in the 2016 US elections, and the role of social media in the deadly 2021 Capitol insurrection in the US, other Global Witness investigations have revealed that platforms frequently fail to enforce their existing policies, raising serious questions about the efficacy of their moderation systems and their willingness to stem online hate.

The already dire situation risks deteriorating further, given that, over the last year, both Meta and X/Twitter dramatically cut their trust and safety teams in the US and globally.

If the level of investment in electoral safeguarding is decreasing even in the US context where these companies have received huge amounts of political and media scrutiny, it paints a worrying picture for countries in the global majority and non-English language markets.

We believe that companies tend to invest less in these countries, and moderators have been shown to be overstretched, underpaid, and traumatised. This is an issue of fairness, as users should not be more at risk of online harms depending on where in the world they are.

It is incumbent on platforms to stem the tide of hate and disinformation. They must be forced to learn from past failures, failures which have proved devastating in a range of global majority countries.

For example, whistleblower Frances Haugen exposed Facebook’s complicity in “literally fanning ethnic violence” by failing to address online hate speech in Ethiopia that reportedly escalated into conflict and deaths.

This came after Facebook’s admission in 2018 that the platform was used to “incite offline violence” during the Rohingya genocide in Myanmar, where thousands are reported to have been killed and hundreds of thousands displaced.

Yet, despite these revelations and contrary to their policies on hate speech and incitement to violence, the problems on these platforms persist.

A series of 2022 Global Witness investigations showed that ahead of elections in Kenya, Facebook adverts containing hate speech and ethnic-based calls to violence were approved, and in the run-up to the elections in Brazil, adverts containing election disinformation were also given the green light.

This is why, on International Day of Democracy, 15 September, we’re joining forces with 149 partners worldwide to start a new movement called the Global Coalition for Tech Justice. As a collective, we will be stepping up campaigning efforts to demand that large social media companies invest in safeguarding our elections and tackling incitement of hate and violence, especially for the overlooked global majority.

These platforms must implement election plans that assess risk and mitigate harms: de-amplify hate and disinformation; invest in content moderation across all languages; stop microtargeting; and meaningfully engage partners such as civil society, researchers, fact-checkers, independent media, and others who protect election integrity.

As billions go to the polls in 2024, the onus is on Big Tech companies to rise to the occasion and put people before profit everywhere they operate. However, we cannot rely on these companies to act alone — we also need government regulation.

In South Africa, while our progressive Constitution and laws may provide an avenue to protect social media users’ privacy and data, there is currently no legal recourse to hold social media companies accountable for the real-life harms caused by the promotion and spread of hate speech, incitement to violence and disinformation on their platforms.

For this, we urgently need strong legislative reforms, similar to recent EU legislation, that ensure that Big Tech companies are fully transparent about their business models and create mechanisms to hold them accountable for harms caused by their platforms.

The stakes for South African and global democracy are too high for anything less.