London/Washington, D.C. – 28 June 2022. A new investigation by Global Witness and legal non-profit Foxglove shows that Facebook failed to detect inflammatory and violent hate speech ads in the two official languages of Kenya – Swahili and English.

Despite the high risk of violence ahead of the Kenyan national election next month, Facebook approved hate speech ads promoting ethnic violence and calling for rape, slaughter and beheading.

“It is appalling that Facebook continues to approve hate speech ads that incite violence and fan ethnic tensions on its platform,” said Nienke Palstra, Senior Campaigner in the Digital Threats to Democracy Campaign at Global Witness. “In the lead-up to a high-stakes election in Kenya, Facebook claims its systems are even more primed for safety – but our investigation once again shows Facebook’s staggering inability to detect hate speech ads on its platform.”

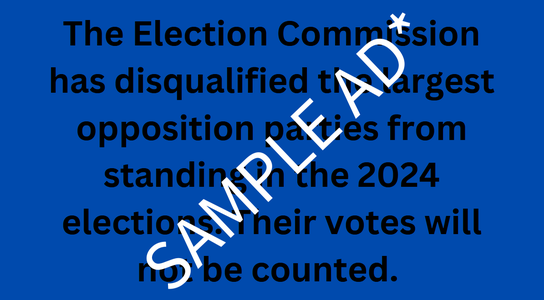

We submitted ten real-life hate speech examples, each translated into both English and Swahili, for a total of 20 ads. Facebook swiftly approved all of them except the first three English-language ads, which it said violated its Grammar and Profanity Policy. After we made minor grammar changes and removed several profane words, Facebook approved those ads too, even though they contained clear hate speech.

Previous Global Witness and Foxglove investigations in Myanmar and Ethiopia show that Facebook also failed to detect explicit Burmese and Amharic-language hate speech ads calling for violence and genocide in those countries, despite its public commitments to do so and admission that Facebook has been used to incite violence.

This investigation into Kenyan hate speech ads raises, for the first time, serious questions about Facebook’s content moderation capabilities in English, as Facebook failed to detect English-language hate speech.

When asked for comment on the investigation findings, a spokesperson for Meta, Facebook’s parent company, responded to Global Witness that they’ve taken “extensive steps” to help Meta “catch hate speech and inflammatory content in Kenya” and that they’re “intensifying these efforts ahead of the election”. They state Meta has “dedicated teams of Swahili speakers and proactive detection technology to help us remove harmful content quickly and at scale”. Meta acknowledges that there will be instances where they miss things and take down content in error, “as both machines and people make mistakes.”

After we alerted them to our investigation, Meta put out a new public statement on its preparations ahead of the Kenyan election, specifically highlighting the apparent action it had taken to remove hateful content in the country. We then resubmitted two ads to see if Facebook had indeed improved its detection of hate speech but, once again, the hate speech ads in Swahili and English were approved.

“Facebook claims to have ‘super-efficient AI models to detect hate speech’, but our findings are a stark reminder of the continued risk of hate and incitement to violence on their platform,” said Palstra. “As one of the world’s most powerful companies, instead of releasing empty PR-led statements, Facebook must make real and meaningful investments and changes to keep hate speech off its platform.”

Cori Crider, a Director at Foxglove, said: “First it was Myanmar, then Ethiopia, now Kenya. Enough is enough. We’re just days out from Kenya’s election and poison is still getting through. Facebook should be catching and stopping hate, not waving it onto the track like a Formula One flagman.

“The Global Witness-Foxglove investigations should explode once and for all the myth that ‘AI’ can solve the problem of global content moderation. Automated hate filters can’t make up for Facebook’s woeful failure to hire enough moderators or to value their work. That failure has helped create the awful results we see today.

“It’s up to lawmakers around the world to force Facebook to make huge changes – beginning with a massive global investment in human content moderation and comprehensive mental health support for every moderator.”

Global Witness and Foxglove call on Facebook to:

- Urgently increase the content moderation capabilities and integrity systems deployed to mitigate risk before, during and after the upcoming Kenyan election.

- Properly resource content moderation in all the countries in which they operate around the world, including paying content moderators a fair wage, allowing them to unionise and providing psychological support.

- Routinely assess, mitigate and publish the risks that their services pose to people’s human rights, along with other societal-level harms, in all countries in which they operate.

- Publish information on what steps they’ve taken in each country and for each language to keep users safe from online hate.